How Can We Maximize What We Learn from Science?

Scientists are people, and considerable research in the behavioral sciences shows that people often opt for quick heuristics or simple decision rules. Especially when time is short, people often prefer to rely on familiar mental shortcuts instead of sifting through large amounts of complex information. But of course, the quick and easy answer can often lead us astray. What's more, existing scientific systems and default assumptions tend to be closed and exclusive rather than open and inclusive, which fundamentally limits the questions we ask and the answers we find. So how do we build systems of conducting and communicating about science that are truly open and inclusive and that help counteract individual and systemic biases?


Making Science More Open and Inclusive

Psychological science, like many other disciplines, was originally set up to be by, for, and about a very narrow subset of people (namely affluent, White, cis men) and their perspectives. Our scientific culture and default practices are therefore inherently closed and exclusive, which restricts and contorts our science in a number of ways. Change is urgently needed to make science more open and inclusive, and successful change will require that individual scientists intervene at every level of the system, from labs and departments to journals and scientific societies. Some of our recent collaborative work sets out concrete tools for scientists looking to effect change in their own roles and spaces.

CLICK THE LINKS TO READ MORE:

- Ledgerwood, A., Lawson, K. M., Kraus, M. W., Vollhardt, J. R., Remedios, J. D., Westberg, D. W., Uskul, A. K., Adetula, A., Leach, C. W., Martinez, J. E., Naumann, L. P., Reddy, G., Tate, C., Todd, A. R., Weltzien, K., Buchanan, N. T., González, R., Montilla Doble, L. J., Romero-Canyas, R., Westgate, E., & Zou, L. X. (in press). Disrupting racism and global exclusion in academic publishing: Recommendations and resources for authors, reviewers, and editors. Collabra: Psychology. [preprint]

- Ledgerwood, A.,* Hudson, S. T. J.,* Lewis, N. A., Jr.,* Maddox, K. B.,* Pickett, C. L.,* Remedios, J. D.,* Cheryan, S.,* Diekman, A. B.,* Dutra, N. B., Goh, J. X., Goodwin, S. A., Munakata, Y., Navarro, D. J., Onyeador, I. N., Srivastava, S., & Wilkins, C. L. (2022). The pandemic as a portal: Reimagining psychological science as truly open and inclusive. Perspectives on Psychological Science. *Co-first authors. [preprint] [published version]

- Ledgerwood, A., da Silva Frost, A., Kadirvel, S., Maitner, A., Wang, Y. A., & Maddox, K. B. (in press). Methods for advancing an open, replicable, and inclusive science of social cognition. Chapter to appear in K. Hugenberg, K. Johnson, & D. E. Carlston (Eds.), The Oxford Handbook of Social Cognition. [preprint]

- Ledgerwood, A., Pickett, C., Navarro, D., Remedios, J. D., & Lewis, N. A., Jr. (2022). The unbearable limitations of solo science: Team science as a path for more rigorous and relevant research. Behavioral and Brain Sciences, 45, E81. [preprint] [published version]

Thinking Deeply across the Research Cycle

Other methodological work from our lab focuses on synthesizing new developments in research methods that promote careful thinking across the research cycle, from study design to data analysis to aggregating across multiple studies using cutting-edge meta-analytic techniques. These resources seek to provide readers with concrete methodological and statistical tools they can use in their own research, with a focus on discussing why, when, and how to implement each tool.

CLICK THE LINKS TO READ MORE:

- Da Silva Frost, A., & Ledgerwood, A. (2020). Calibrate your confidence in research findings: A tutorial on improving research methods and practices. Journal of Pacific Rim Psychology.

- Ledgerwood, A. (2019). New developments in research methods. In E. J. Finkel & R. F. Baumeister (Eds.), Advanced Social Psychology (pp. 39-61). Oxford University Press.

- Ledgerwood, A., Soderberg, C. K., & Sparks, J. (2017). Designing a study to maximize informational value. In J. Plucker & M. Makel (Eds.), Toward a more perfect psychology: Improving trust, accuracy, and transparency in research (pp. 33-58). Washington, DC: American Psychological Association.

- Ledgerwood, A. (2016). Introduction to the special section on improving research practices: Thinking deeply across the research cycle. Perspectives on Psychological Science, 11, 661-663.

- Ledgerwood, A. (2016). The Start-Local Approach. Talk presented at the Training Preconference of the 2016 Annual Convention of the Society for Personality and Social Psychology.

- Ledgerwood, A. (2014). Introduction to the special section on moving toward a cumulative science: Maximizing what our research can tell us. Perspectives on Psychological Science, 9, 610-611.

- Ledgerwood, A. (2014). Introduction to the special section on advancing our methods and practices. Perspectives on Psychological Science, 9, 275-277.

Modeling Tradeoffs to Identify Optimal Research Strategies:

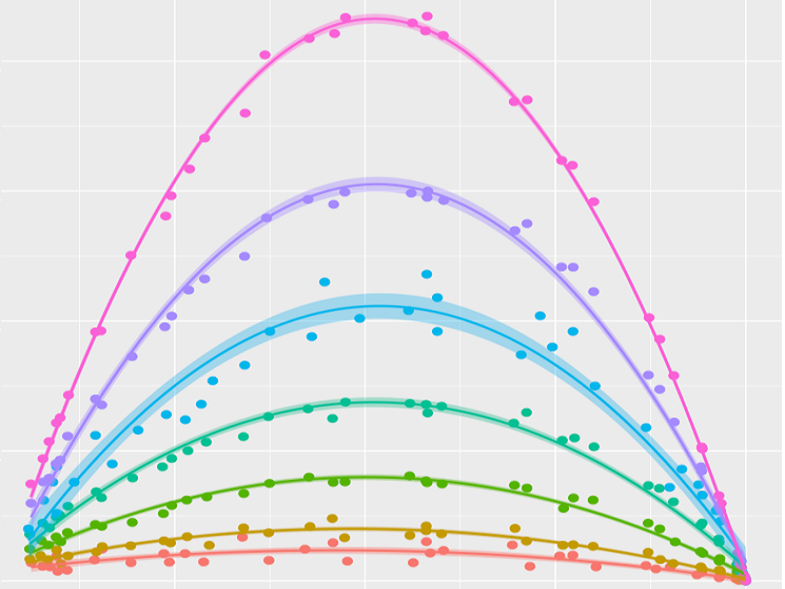

The Case of the Unplanned Covariate

Once viewed as a statistical power-boosting hero, covariates have recently been recast in a negative light as concerns emerged that they can inflate Type I error rates when used flexibly (for example, when researchers decide whether to include a covariate only after seeing which analysis yields a significant result). We conducted a Monte Carlo simulation study designed to quantify the Type I/Type II error tradeoff associated with including an unplanned, independent covariate in an experimental design. By varying the effect size under investigation, the correlation between the covariate and the DV, and the particular analytic strategy modeled in our simulations, we were able to identify strategies that are particularly terrible (e.g., ones that do virtually nothing to boost power while allowing Type I error to inflate dramatically) as well as strategies that are particularly useful (e.g., ones that provide a substantial power boost while inflating Type I error only a little or not at all).
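To make the tradeoff concrete, here is a minimal Monte Carlo sketch using only the Python standard library. It is not the simulation code from our study; the sample size, the covariate-DV correlation, and the three strategies are illustrative assumptions. The hypothetical "never" strategy always runs a plain two-group comparison, "always" always adjusts for the covariate (as if it had been planned), and "flexible" counts a result as significant if either analysis is, mimicking a researcher who adds the covariate only after seeing the results. Significance uses a large-sample normal approximation rather than the exact t distribution, so all rejection rates run slightly above their exact values.

```python
import random
import statistics

# Two-sided alpha = .05 using a large-sample normal approximation
# (slightly liberal compared with the exact t distribution at n = 100).
Z_CRIT = statistics.NormalDist().inv_cdf(0.975)


def residualize(v, z):
    """Residuals from regressing v on z with an intercept."""
    mz, mv = statistics.fmean(z), statistics.fmean(v)
    b = sum((zi - mz) * (vi - mv) for zi, vi in zip(z, v)) / sum((zi - mz) ** 2 for zi in z)
    return [vi - mv - b * (zi - mz) for vi, zi in zip(v, z)]


def slope_t(x, y, n_params):
    """t statistic for the slope of y on x, with residual df = len(y) - n_params."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    sse = sum((yi - my - b * (xi - mx)) ** 2 for xi, yi in zip(x, y))
    return b / (sse / (len(y) - n_params) / sxx) ** 0.5


def simulate(d, r, n_per_group=50, n_sims=1000, seed=1):
    """Rejection rate of each hypothetical analytic strategy across simulated experiments."""
    rng = random.Random(seed)
    hits = {"never": 0, "always": 0, "flexible": 0}
    for _ in range(n_sims):
        cond, cov, y = [], [], []
        for group in (0, 1):
            for _ in range(n_per_group):
                c = rng.gauss(0, 1)  # covariate: independent of condition
                cond.append(group)
                cov.append(c)
                # DV has unit within-group variance and correlates r with the covariate,
                # so d is a standardized mean difference.
                y.append(d * group + r * c + (1 - r * r) ** 0.5 * rng.gauss(0, 1))
        # Plain two-group comparison (equivalent to a pooled-variance t test).
        sig_plain = abs(slope_t(cond, y, 2)) > Z_CRIT
        # ANCOVA: condition effect after partialling out the covariate (Frisch-Waugh-Lovell).
        sig_cov = abs(slope_t(residualize(cond, cov), residualize(y, cov), 3)) > Z_CRIT
        hits["never"] += sig_plain
        hits["always"] += sig_cov
        hits["flexible"] += sig_plain or sig_cov  # report whichever analysis "works"
    return {k: v / n_sims for k, v in hits.items()}


if __name__ == "__main__":
    print("Type I error:", simulate(d=0.0, r=0.5))
    print("Power:       ", simulate(d=0.5, r=0.5))
```

Setting `d=0.0` estimates Type I error for each strategy, and a nonzero `d` estimates power. Because the flexible strategy rejects whenever either analysis does, its rejection rate on the same simulated datasets can never fall below that of the other two.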

Latent Variables Boost Accuracy at the Cost of Precision

In all of our research, we pay particular attention to the appropriateness of various methods and practices and what can be gained (or lost) from choosing one alternative over another. For instance, social psychologists often seek to shed light on the basic process underlying an effect by testing for mediation. A simple three-variable mediation model can be analyzed either with a typical regression approach, or with structural equation modeling (SEM) using latent variables. Which is the better option? This is a more complex and consequential question than researchers often realize.

On the one hand, statisticians often recommend a SEM approach because it tends to produce more accurate estimates. (Regression tends to give inaccurate estimates that are often too small, because they have been attenuated by measurement error.) On the other hand, our work has shown that a regression approach tends to produce more precise estimates than a SEM approach, with smaller standard errors and therefore increased power to detect an effect in the first place.
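The attenuation mentioned above has a simple closed form in the bivariate case. Under classical test theory (random, uncorrelated measurement error), the observed correlation between two measures equals the true-score correlation shrunk by the square root of the product of their reliabilities, and dividing by that factor gives Spearman's correction for attenuation. The numbers in the worked line are illustrative only, and in a three-variable mediation model unreliability can distort the paths in less predictable ways than this single-relation formula suggests.

```latex
r_{xy} = \rho_{XY}\,\sqrt{\rho_{xx'}\,\rho_{yy'}}
\qquad\Longrightarrow\qquad
\hat{\rho}_{XY} = \frac{r_{xy}}{\sqrt{\rho_{xx'}\,\rho_{yy'}}}
% Illustrative values: true correlation .50, reliabilities .70 and .80
\rho_{XY} = .50,\; \rho_{xx'} = .70,\; \rho_{yy'} = .80
\;\Rightarrow\;
r_{xy} = .50\sqrt{.56} \approx .50 \times .748 \approx .37
```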

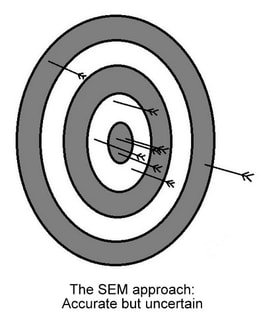

This means that SEM estimates are correctly centered (i.e., accurate) but also widely scattered (imprecise, and therefore less likely to be significant). Imagine that each study is like a dart thrown at a dartboard. Over time (that is, across many studies), a SEM approach will get the darts to converge on the bull's-eye (the real strength of the relation between variables in the population), but there will be a lot of variability in where exactly each individual dart ends up. The estimate from any individual study might be off by quite a bit, but the meta-analytic average would be accurate (that is, it would recover the true population parameter).

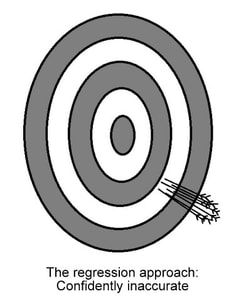

In contrast, regression estimates are incorrectly centered (inaccurate, and often far too small) but tightly clustered (precise, and therefore more likely to be significant). Because there's less variability in where the darts end up, the results of a single study are more likely to be significant, so this approach is good at telling us whether there is an effect in the first place. But because the darts are nowhere near the bull's-eye, we'll get the wrong idea about how big that effect actually is, even when aggregating across studies in a meta-analysis.
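The dartboard contrast can be reproduced in a few lines. This sketch uses Spearman's correction for attenuation (dividing each observed correlation by the known reliability) as a simple stand-in for a latent-variable model; it is not SEM and not our published simulation, and the true correlation (.50), reliability (.60 for both measures), and sample size are assumptions. Each simulated study yields an uncorrected estimate (like the regression darts) and a corrected one (like the SEM darts), and the unweighted average across studies plays the role of the meta-analytic average.

```python
import math
import random
import statistics


def pearson_r(x, y):
    """Sample Pearson correlation."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulate_studies(rho=0.5, reliability=0.6, n=100, n_studies=1000, seed=7):
    """One (uncorrected, corrected) correlation estimate per simulated study."""
    rng = random.Random(seed)
    load = math.sqrt(reliability)       # observed = load * true + noise * error, so Var = 1
    noise = math.sqrt(1 - reliability)
    uncorrected, corrected = [], []
    for _ in range(n_studies):
        xs, ys = [], []
        for _ in range(n):
            tx = rng.gauss(0, 1)
            ty = rho * tx + math.sqrt(1 - rho ** 2) * rng.gauss(0, 1)  # true scores correlate rho
            xs.append(load * tx + noise * rng.gauss(0, 1))
            ys.append(load * ty + noise * rng.gauss(0, 1))
        r = pearson_r(xs, ys)
        uncorrected.append(r)
        # Spearman's correction: r / sqrt(rel_x * rel_y); both reliabilities are equal here.
        corrected.append(r / reliability)
    return uncorrected, corrected


def summarize(estimates):
    """Across-study average (a simple meta-analytic stand-in) and spread."""
    return statistics.fmean(estimates), statistics.stdev(estimates)


if __name__ == "__main__":
    unc, cor = simulate_studies()
    print("uncorrected: mean %.3f, SD %.3f" % summarize(unc))
    print("corrected:   mean %.3f, SD %.3f" % summarize(cor))
```

With these settings the uncorrected estimates should cluster around roughly .30 (the true .50 times the reliability of .60), while the corrected estimates center near .50 but spread more widely, because dividing by .60 inflates their standard deviation by a factor of 1/.60.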

Is there a happy medium? Researchers can maximize both accuracy and precision by investing in reliable measures and by planning mediation studies with adequate power. But when highly reliable measures aren’t feasible, a two-step strategy for testing and estimating the indirect effect in a mediation model may be the best approach. We recommend using observed variables to test the indirect effect for significance (e.g., using regression and a bootstrapped SE), and then estimating the path coefficient for the indirect effect using latent variables in SEM.
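The first step of this strategy, testing the indirect effect with observed variables, might look like the following sketch. The indirect effect is the product of path a (X to M) and path b (M to Y, controlling for X), both estimated by ordinary least squares, and a nonparametric bootstrap resamples cases to obtain a bootstrapped SE along with a percentile confidence interval. The data generator, sample size, path values, and number of resamples are hypothetical choices for illustration. The second step (estimating the indirect effect with latent variables) requires SEM software and is not sketched here.

```python
import random
import statistics


def slope(x, y):
    """OLS slope of y on x (with intercept)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)


def residualize(v, z):
    """Residuals from regressing v on z (with intercept)."""
    b = slope(z, v)
    mz, mv = statistics.fmean(z), statistics.fmean(v)
    return [vi - mv - b * (zi - mz) for vi, zi in zip(v, z)]


def indirect_effect(x, m, y):
    """a * b, where a comes from M ~ X and b is M's coefficient in Y ~ X + M."""
    a = slope(x, m)
    # Frisch-Waugh-Lovell: M's coefficient in Y ~ X + M equals the slope of
    # (Y residualized on X) on (M residualized on X).
    b = slope(residualize(m, x), residualize(y, x))
    return a * b


def bootstrap_indirect(x, m, y, n_boot=1000, alpha=0.05, seed=11):
    """Bootstrapped SE and percentile CI for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    boots = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        boots.append(indirect_effect([x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx]))
    boots.sort()
    lo = boots[int(n_boot * alpha / 2)]
    hi = boots[int(n_boot * (1 - alpha / 2)) - 1]
    return statistics.stdev(boots), (lo, hi)


def make_data(n=200, a=0.5, b=0.5, seed=3):
    """Hypothetical data with a true indirect effect of a * b."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(n)]
    m = [a * xi + rng.gauss(0, 1) for xi in x]
    y = [b * mi + rng.gauss(0, 1) for mi in m]
    return x, m, y


if __name__ == "__main__":
    x, m, y = make_data()
    se, (lo, hi) = bootstrap_indirect(x, m, y)
    print(f"indirect = {indirect_effect(x, m, y):.3f}, bootstrap SE = {se:.3f}, "
          f"95% CI [{lo:.3f}, {hi:.3f}]")
```

A confidence interval that excludes zero supports an indirect effect; the point estimate from this step would still be attenuated if the measures were unreliable, which is why the strategy hands estimation off to a latent-variable model.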